a blog by Peter Leonard

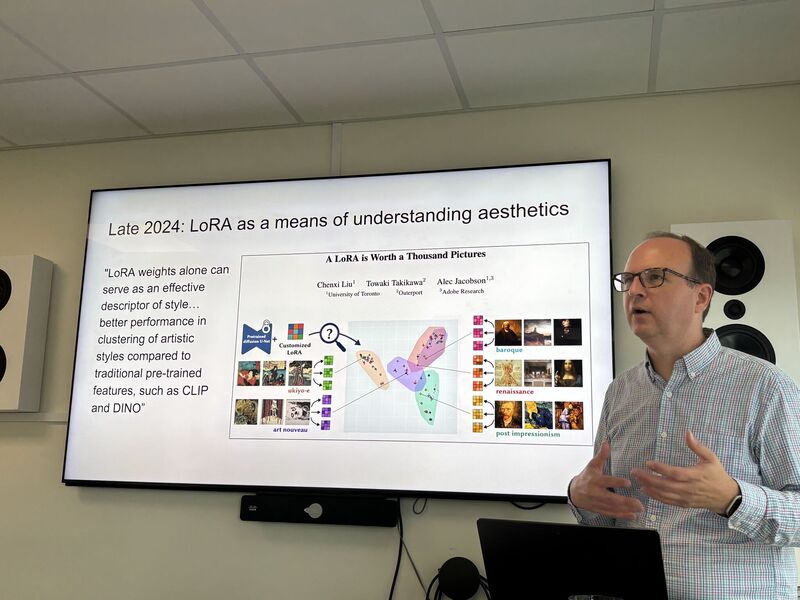

At Aarhus University, I had a chance to present some preliminary work on training Low-Rank Adaptations of diffusion models to emulate the style of 19th century painting. I’ve written up some of my notes, including the synthetic Golden Age images themselves.

At Aarhus University, I had a chance to present some preliminary work on training Low-Rank Adaptations of diffusion models to emulate the style of 19th century painting. I’ve written up some of my notes, including the synthetic Golden Age images themselves.

That writeup will hopefully explain how (and why) we get to the image on the the right: