Although it sometimes seems like developments in large language models have sucked all the air out of any other discussions, the attention paid to commercial systems such as ChatGPT has the side benefit of encouraging a lot of interesting open-source approaches.

The idea of asking a commercial, closed model such as ChatGPT or GPT-4 for its wisdom on a specialized topic seems less interesting to me than marrying the model’s inferential logic with a set of controlled, curated facts: a set of documents whose origin and contents you know.

This is the idea behind PrivateGPT, one of a number of projects that have sprung up on GitHub to allow us to make two important moves:

1) Use an open-source, as opposed to commercial, large language model as a base layer.

2) Connect that underlying layer with a smaller set of documents, and restrict answers to that specialized subcorpus.

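Like many other projects, PrivateGPT uses LangChain to query a smaller dataset: the documents are split into chunks and indexed in a local vector store, and the chunks most relevant to each question are handed to the model as context.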
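To make the retrieval step concrete, here is a toy sketch in Python. It is not PrivateGPT’s code: the neural embeddings and vector store are replaced by bag-of-words cosine similarity, and the function names (`chunk_text`, `vectorize`, `top_k`) and the chunk size and overlap (50 words, 10-word overlap) are hypothetical choices for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `size` words (hypothetical defaults)."""
    words = text.split()
    step = size - overlap
    return [
        " ".join(words[start:start + size])
        for start in range(0, max(len(words) - overlap, 1), step)
    ]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a crude stand-in for a neural embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (source, chunk) pairs most similar to the question."""
    q = vectorize(question)
    scored = [
        (cosine(q, vectorize(chunk)), source, chunk)
        for source, text in docs.items()
        for chunk in chunk_text(text)
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, chunk) for _, source, chunk in scored[:k]]


if __name__ == "__main__":
    # Two tiny invented stand-in "articles", not text from the archive.
    docs = {
        "paint.txt": "Paint programs for the Macintosh have come a long way "
                     "since MacPaint introduced painting with pixels.",
        "stocks.txt": "Apple shares fell as investors worried about the Macintosh market.",
    }
    for source, chunk in top_k("What are some popular painting programs for the Macintosh?", docs, k=1):
        print(source, "->", chunk)
```

A real setup swaps `vectorize` for an embedding model, which can match a question to a passage that shares few or no exact words with it; the chunk-then-rank shape stays the same.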

As an example, I have 34,000 articles written between 1987 and 1997 about Apple and the market for Macintosh computers. They include software reviews, news about new computers, Apple’s stock price, and competitors such as Microsoft. With these articles connected to a 13-billion-parameter model such as Vicuna, we can ask relatively sophisticated questions such as:

What are some popular painting programs for the Macintosh?

Some popular painting programs for the Macintosh include Claris Corp.’s MacPaint, Electronic Arts Inc.’s Studio/32, Fractal Design Corp.’s Painter and ColorStudio, SuperMac Technology’s PixelPaint Professional, and Delta Tao Software Inc.’s Pulse.

These answers are real, and more importantly they are “correct” for the time period of the late 1980s (a pre-Photoshop era when pixel-based programs in the tradition of MacPaint were most popular). Perhaps most importantly, however, PrivateGPT can “cite its work” by showing which documents from my archive contributed to its reasoning:

> source_documents/1992-08-03_MacWeek_A12432500.txt:

To say that paint programs for the Macintosh have come a long way in the past few years would be an understatement. They’ve come a very long way from the simple elegance of Claris Corp.’s MacPaint, which introduced us to painting with pixels in black and white, to the mind-boggling sophistication of programs such as Electronic Arts Inc.’s Studio/32, Fractal Design Corp.’s Painter and ColorStudio, SuperMac Technology’s PixelPaint Professional, Delta Tao Software Inc.’s

> source_documents/1989-01-31_MacWeek_A7293981.txt:

Studio/8. It is rare that we encounter a program that tries to improve upon the Macintosh interface — and works. Usually these attempts backfire, but Studio/8 is an exception. Studio/8 is also a powerful paint program combining many paint options and special effects not found elsewhere.
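The file names in these citations seem to encode a date, a publication and an article ID. Assuming every file in the archive follows that `YYYY-MM-DD_Publication_ID.txt` pattern (only the two names above are shown, so this is a guess), a few lines of Python can turn a cited path into metadata, for example to sort citations chronologically or to check what date range a corpus covers. `ArticleMeta`, `parse_source_name` and `corpus_span` are hypothetical helpers, not part of PrivateGPT.

```python
from datetime import date
from pathlib import Path
from typing import NamedTuple


class ArticleMeta(NamedTuple):
    published: date
    publication: str
    article_id: str


def parse_source_name(path: str) -> ArticleMeta:
    """Parse a name like '1992-08-03_MacWeek_A12432500.txt'.

    Assumes the DATE_PUBLICATION_ID.txt convention seen in the citations,
    and that publication names contain no underscores.
    """
    stem = Path(path).stem  # drops the directory and the .txt suffix
    date_part, publication, article_id = stem.split("_", 2)
    return ArticleMeta(date.fromisoformat(date_part), publication, article_id)


def corpus_span(paths: list[str]) -> tuple[date, date]:
    """Earliest and latest publication dates among the given files."""
    dates = [parse_source_name(p).published for p in paths]
    return min(dates), max(dates)


if __name__ == "__main__":
    cited = [
        "source_documents/1992-08-03_MacWeek_A12432500.txt",
        "source_documents/1989-01-31_MacWeek_A7293981.txt",
    ]
    # Print the cited articles oldest first.
    for path in sorted(cited, key=lambda p: parse_source_name(p).published):
        print(parse_source_name(path))
    print(corpus_span(cited))
```

Run over a whole archive, a check like `corpus_span` shows in advance whether the documents could possibly contain the answer to a question about a later event.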

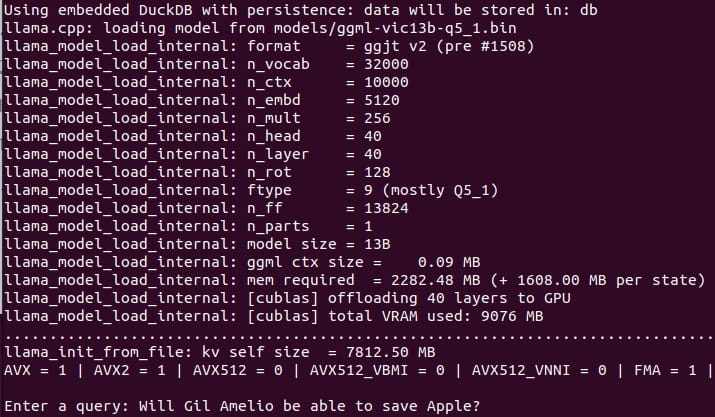

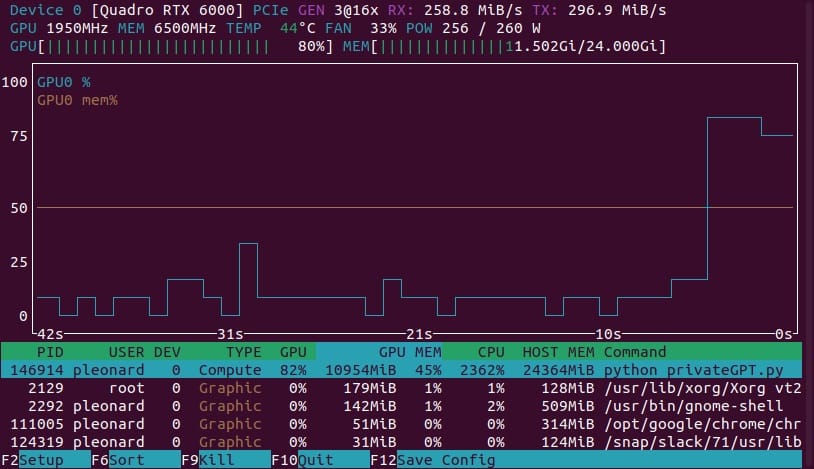

The 40 layers of the 13B parameter Vicuna model use over 9 gigabytes of VRAM on my workstation’s Nvidia card — this is the orange line in the graph below. Each time I execute a query, the blue line rises, representing the GPU utilization:

This, together with the fact that a query still works after you unplug the Ethernet cable, is what distinguishes this setup from using ChatGPT through OpenAI’s web interface or API: all computation is local, and all data stays on your machine.
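As a rough sanity check on the 9-gigabyte figure: the memory taken by a model’s weights is about its parameter count times bits per weight, divided by eight. The exact weight format isn’t given here, so the sketch below simply compares common precisions for a nominal 13 billion parameters. It counts weights only and ignores the context cache and runtime overhead; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores the KV cache, activations and framework overhead.
    """
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Compare a nominal 13B-parameter model at common precisions.
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: {weight_memory_gb(13e9, bits):.1f} GB")
```

At 16 bits per weight the weights alone would need 26 GB, so a 13B model fitting in roughly 9 GB suggests quantized weights; at 4 bits the weights come to 6.5 GB, leaving the remainder for the cache and overhead.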

The effectiveness (and accuracy) of this kind of local use of large language models is of course going to depend on the underlying LLM you choose, as well as the amount of source data you have available in your private corpus. But I’ve been impressed with the results, especially in cases such as this:

What are the advantages of PageMaker over Quark XPress?

The main advantage of PageMaker over Quark XPress is its free-form approach to page layout, as opposed to XPress’ frame-based placement system. Additionally, PageMaker has historically been known for its ease of integration with other software and the new version’s Edit Original command takes advantage of System 7’s interapplication communications. However, QuarkXPress has a large number of third-party extensions available which PageMaker lacks.

And finally, here’s evidence that the model is not “breaking free” of the sources I’m giving it:

Will Apple’s new CEO, Gil Amelio, be able to save the company?

It is not possible to predict with certainty whether or not Gil Amelio will be able to save Apple. However, he seems to have a good track record of turning around struggling companies and has been credited with taking National Semiconductor from the brink of economic ruin to profitability. Additionally, his focus on long-term strategies rather than short-term profits may bode well for Apple’s future.

Amelio did not, of course, end up saving the company — he was ejected by Steve Jobs in a boardroom coup. Knowing this would require a local corpus that extended beyond 1997, and so the answer given is actually exactly what we want: a ChatGPT clone restricted to only the facts in front of it, neither regurgitating Wikipedia nor hallucinating an answer it cannot know.
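That restraint largely comes from what the model is shown at query time: the retrieved excerpts are pasted into the prompt ahead of the question, together with an instruction to stay within them. The sketch below assembles such a prompt. The `build_prompt` name and its wording are illustrative assumptions, not PrivateGPT’s actual template, and an instruction like this nudges the model rather than guaranteeing it won’t stray.

```python
def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a context-only prompt from (source, text) pairs.

    Hypothetical template: the instruction wording is illustrative.
    """
    # Label each excerpt with its source so the answer can be traced back.
    context = "\n\n".join(f"[{source}]\n{text}" for source, text in passages)
    return (
        "Answer the question using only the excerpts below. "
        "If they do not contain the answer, say that you don't know.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Placeholder excerpt; in practice these come from the retrieval step.
    excerpts = [("example.txt", "(retrieved excerpt text would go here)")]
    print(build_prompt("Will Apple's new CEO, Gil Amelio, be able to save the company?", excerpts))
```

Keeping the source name next to each excerpt is also what makes it straightforward to print citations alongside the answer.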