What does a Thai restaurant have in common with a scholarly article? Not much, but emerging standards for machine-readable semantic content online can help both reach more people. Publishers of academic reviews can take advantage of new techniques to surface quality content. And these new technical standards can help a core value of the academy — peer review — maintain visibility.

One of my side projects is the Survey of Articles, a database of nearly 300 reviews of journal articles, books and festschrifts about the Norwegian playwright Henrik Ibsen. This online resource is based on many years of work by the Ibsen Society of America, an international scholarly society. Over the course of decades, leading academics have read, reviewed and critiqued articles about Ibsen published in many different journals and languages. Because Ibsen is one of the most ‘international’ figures in Scandinavian literature, putting these critiques online helps researchers all over the world. Although the Society had previously placed PDFs online, we felt that breaking everything up into a database would provide more granular ways of digging into the data. But if we built it, would anybody come?

It turns out that search engines such as Google are embracing machine-readable markup, such as “microformats,” to help solve problems like this. Try searching for “Thai restaurant” to see this kind of markup in action. If you’re near a city with Thai food (and if Google can determine your location from your IP address), you’re likely to see a mix of results, including a map and one or two reviews like this:

How does Google get those four stars, the number of reviews and so on for this particular restaurant? And how does Yelp communicate these pieces of data to the search engine? Through machine-readable markup: by embedding a kind of secret code for web spiders in the page’s HTML, which browsers don’t display but crawlers can read.

Google supports three different kinds of this markup: RDFa, the hReview microformat, and Microdata. For a technical overview of the three options, see Philip Jägenstedt’s analysis here. Jägenstedt concludes that all three have their flaws (“Microformats, you’re a class attribute kludge”) but that Microdata holds the most promise for the future. Since his article was written 18 months ago, I decided to implement Microdata, the newest of the three. (I had some prior experience with hReview and found it cumbersome to express cleanly in my HTML.)

The next step was figuring out how to add Microdata to my existing database-driven HTML. Google maintains a Tips and Tricks page on how to do this. Their example (bear with me here) is a pizza restaurant:

Here’s where some human value judgements ensued. There’s no way that a critical review of an academic work can be reduced to a five-star rating. Even though Google allows other types of quantitative evaluation, such as thumbs up/thumbs down or 100-point scales, none of these systems map in any way to what the Society is trying to accomplish by putting these Critical Annotations online. So I jettisoned the star-rating part of the pizza example. In addition, I only wanted to note the year in which an article was reviewed, rather than the specific month and day (which is fairly meaningless for an annual publication). Finally, I didn’t want to whittle the Annotation down further into a one-sentence ‘summary’, even though the review vocabulary offers that property. With these adjustments in mind, I decided to express the data I had available through the following snippet of HTML and PHP:
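For readers who don’t work in PHP, here is a minimal Python sketch of the same kind of markup. It assumes Google’s data-vocabulary.org Review type, with its `itemreviewed`, `reviewer`, `dtreviewed` and `description` properties; the function name `review_microdata` and the surrounding HTML layout are hypothetical. Following the choices above, it emits no `rating` or `summary` property and records only the year.

```python
from html import escape

# Assumption: the Review vocabulary used by Google's Rich Snippets at the time.
REVIEW_TYPE = "http://data-vocabulary.org/Review"


def review_microdata(article_title: str, journal_title: str,
                     reviewer: str, year: int, annotation: str) -> str:
    """Render one critical annotation as Microdata-annotated HTML.

    No 'rating' or 'summary' property is emitted, and 'dtreviewed'
    carries only the year of the review.
    """
    # escape() guards against '&', '<', quotes etc. in titles and annotations.
    return (
        f'<div itemscope itemtype="{REVIEW_TYPE}">\n'
        f'  <h1><span itemprop="itemreviewed">{escape(article_title)}</span>'
        f' from {escape(journal_title)}</h1>\n'
        f'  <p>Reviewed by <span itemprop="reviewer">{escape(reviewer)}</span>'
        f' in <span itemprop="dtreviewed">{year}</span></p>\n'
        f'  <div itemprop="description">{escape(annotation)}</div>\n'
        f'</div>'
    )
```

Keeping every `itemprop` inside a single `itemscope` element is what lets a parser group the properties into one review item; whether Google accepts a year-only `dtreviewed` is something to confirm with the testing tool.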

The next step was to test whether Google could parse my embedded Microdata and recognize each page as a review. For this, I used the Rich Snippets Testing Tool, which ingests a web page and, in real time, exposes the semantic markup it finds, along with any errors. After a bit of debugging and rearranging the nesting and position of the various elements, I got the code to do what I wanted. Here’s an example of a successfully parsed page:

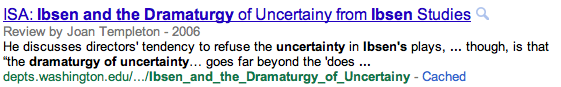
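Alongside the Rich Snippets Testing Tool, a quick local sanity check can catch a missing property before a page goes live. The sketch below uses Python’s standard `html.parser` to collect every `itemprop` name on a page. The set of required properties is an assumption about what a Review page should carry, and the check ignores nesting and scope entirely, so it is no substitute for Google’s tool.

```python
from html.parser import HTMLParser

# Assumption: the properties a Review page is expected to carry.
REQUIRED = {"itemreviewed", "reviewer", "dtreviewed", "description"}


class ItempropCollector(HTMLParser):
    """Record every itemprop name that appears anywhere in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.itemprops: set = set()

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for bare
        # attributes such as itemscope.
        for name, value in attrs:
            if name == "itemprop" and value:
                # One itemprop attribute may list several space-separated names.
                self.itemprops.update(value.split())


def missing_review_props(page_html: str) -> set:
    """Return the required Review properties not found in page_html."""
    collector = ItempropCollector()
    collector.feed(page_html)
    collector.close()
    return REQUIRED - collector.itemprops
```

An empty result means every expected property appears somewhere on the page; it says nothing about whether they sit inside the right `itemscope`, which is exactly the kind of nesting problem the testing tool helps with.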

Finally, and most importantly, you’ll need to submit your site to Google for review. It took a few weeks for my site to go through; I don’t know how often Google processes these submissions, but it may review them periodically. You’ll know your site has made it through when search results start exposing the additional metadata your markup describes. For my content, the result was this:

Here’s a mapping of the information contained in the Microdata markup to the results page of a Google search:

There are a few things to point out here.

First, although the title of the article is crucial to Google’s system picking up on the review, that title is not actually shown in the search result. Instead, the title of the page itself is displayed. This makes it important that your TITLE tags are accurate and informative. In this case, I settled on the following template:

Name of Site: Article Title from Journal Title

For this article, that works out to:

ISA: Ibsen and the Dramaturgy of Uncertainty from Ibsen Studies

I could go further with this and include the author of the article in the title, but at a certain point you start running into concerns about the length of page titles as exposed in bookmarking interfaces and similar tools.
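The template is simple enough to generate in code. Here is a minimal Python sketch using a hypothetical `page_title` helper; the placement of the optional author and the `max_len` cap are illustrative choices, not limits prescribed by Google or by any bookmarking tool.

```python
from typing import Optional


def page_title(site: str, article: str, journal: str,
               author: Optional[str] = None,
               max_len: Optional[int] = None) -> str:
    """Build 'Site: Article from Journal', optionally prefixing the author."""
    subject = f"{author}, {article}" if author else article
    title = f"{site}: {subject} from {journal}"
    if max_len is not None and len(title) > max_len:
        # Leave room for the ellipsis character.
        cut = title[: max_len - 1]
        # If the cut falls mid-word, back up to the previous space.
        if title[max_len - 1] != " ":
            cut = cut.rsplit(" ", 1)[0]
        title = cut.rstrip() + "…"
    return title
```

For example, `page_title("ISA", "Ibsen and the Dramaturgy of Uncertainty", "Ibsen Studies")` returns `"ISA: Ibsen and the Dramaturgy of Uncertainty from Ibsen Studies"`. Truncating on a word boundary keeps a shortened title readable in a bookmark list instead of ending on a fragment.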

Second, although we are marking up the review itself with `itemprop="description"`, Google doesn’t necessarily show the start of that text in the summary on its results page. The instructions on the Rich Snippets Testing Tool explain why: “The reason we can’t show text from your webpage is because the text depends on the query the user types.” Indeed, as the screenshot above shows, the returned text depends on the keywords a user types into the query box. Still, this itemprop is crucial to Google parsing your content as an actual review, so don’t leave it out.

Finally, even though we left out the stars and any other system of quantitative measurement, Google still identified our page as a review and returned it as one of the top results (the very first result, at least as of this writing and for this particular test query). The name of the reviewer and the year in which the review was written appeared alongside the result, which will hopefully encourage users to click through to the critical evaluation. This, I think, shows that a system originally designed for Thai restaurant reviews can be appropriated to help academic web surfers find quality content. As the web grows ever more crowded with data, it’s incumbent on people in the academy to mark up their content in ways that help people looking for serious research.